Results for addjoin and refadd are underestimated (see 20180308-NOOR)

Revision of networks of ontologies comparing the new refine/addjoin/refadd operators (4 agents; 10000 iterations)

Hypothesis:

Experimental setting: Exactly the same as 20170208-NOOR with:

Experimenter: Jérôme Euzenat (INRIA)

Date: 20170209

Lazy lavender hash: 738f55c3e7dc6fc16ebcfe070adc314dd4703d21

Parameters: params.sh

Command line (script.sh):

java -Dlog.level=INFO -cp lib/lazylav/ll.jar:lib/slf4j/logback-classic-1.1.9.jar:lib/slf4j/logback-core-1.1.9.jar:. fr.inria.exmo.lazylavender.engine.Monitor -DrevisionModality=refine -DnbAgents=4 -DnbIterations=10000 -DnbRuns=10 -DreportPrecRec

java -Dlog.level=INFO -cp lib/lazylav/ll.jar:lib/slf4j/logback-classic-1.1.9.jar:lib/slf4j/logback-core-1.1.9.jar:. fr.inria.exmo.lazylavender.engine.Monitor -DrevisionModality=addjoin -DnbAgents=4 -DnbIterations=10000 -DnbRuns=10 -DreportPrecRec

java -Dlog.level=INFO -cp lib/lazylav/ll.jar:lib/slf4j/logback-classic-1.1.9.jar:lib/slf4j/logback-core-1.1.9.jar:. fr.inria.exmo.lazylavender.engine.Monitor -DrevisionModality=refadd -DnbAgents=4 -DnbIterations=10000 -DnbRuns=10 -DreportPrecRec

Classes used: NOOEnvironment, AlignmentAdjustingAgent, AlignmentRevisionExperiment, ActionLogger, AverageLogger, Monitor

Execution environment: Debian Linux virtual machine configured with four processors and 20GB of RAM, running on a Dell PowerEdge T610 with 4 Intel Xeon Quad Core 1.9GHz E5-2420 processors, under Linux ProxMox 2 (Debian); Java 1.8.0 HotSpot.

20170209-NOOR-4-10000-addjoin.tsv 20170209-NOOR-4-10000-addjoin.txt 20170209-NOOR-4-10000-refadd.tsv 20170209-NOOR-4-10000-refadd.txt 20170209-NOOR-4-10000-refine.tsv 20170209-NOOR-4-10000-refine.txt

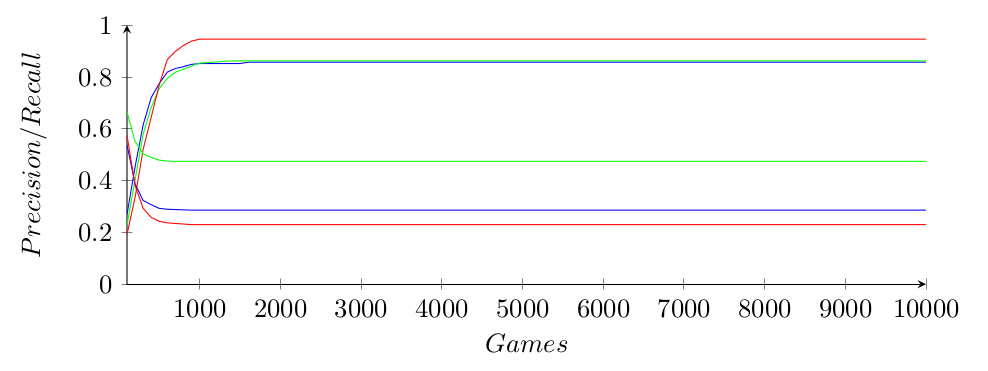

| modality | size | success | convergence | Incoherence degree | Precision | F-measure | Recall |
|---|---|---|---|---|---|---|---|
| refine | 20 | .99 | 919 | .03 | .95 | .37 | .23 |
| addjoin | 24 | .99 | 1523 | .09 | .86 | .43 | .29 |
| refadd | 40 | .99 | 1336 | .09 | .86 | .61 | .47 |
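As a sanity check on the table above, the F-measure column can be recomputed from the Precision and Recall columns. The sketch below assumes the reported F-measure is the usual harmonic mean of precision and recall; the class name `FMeasureCheck` and the method `fMeasure` are hypothetical and not part of Lazy lavender.

```java
import java.util.Locale;

// Hypothetical helper, not part of Lazy lavender: recomputes the F-measure
// column of the table from its Precision and Recall columns.
class FMeasureCheck {

    // Assumption: F-measure is the harmonic mean of precision and recall.
    // Returns 0 when both inputs are 0 to avoid a division by zero.
    static double fMeasure(double precision, double recall) {
        double sum = precision + recall;
        return sum == 0.0 ? 0.0 : 2.0 * precision * recall / sum;
    }

    public static void main(String[] args) {
        // Values copied from the table: modality, precision, recall, reported F-measure.
        String[] modalities = { "refine", "addjoin", "refadd" };
        double[][] rows = {
            { 0.95, 0.23, 0.37 },
            { 0.86, 0.29, 0.43 },
            { 0.86, 0.47, 0.61 },
        };
        for (int i = 0; i < rows.length; i++) {
            double computed = fMeasure(rows[i][0], rows[i][1]);
            System.out.printf(Locale.ROOT, "%s: computed %.2f, reported %.2f%n",
                    modalities[i], computed, rows[i][2]);
        }
    }
}
```

Under this assumption, the recomputed values agree with the reported ones to two decimals for all three modalities. The gain in F-measure for refadd therefore comes from its higher recall, since its precision equals that of addjoin.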

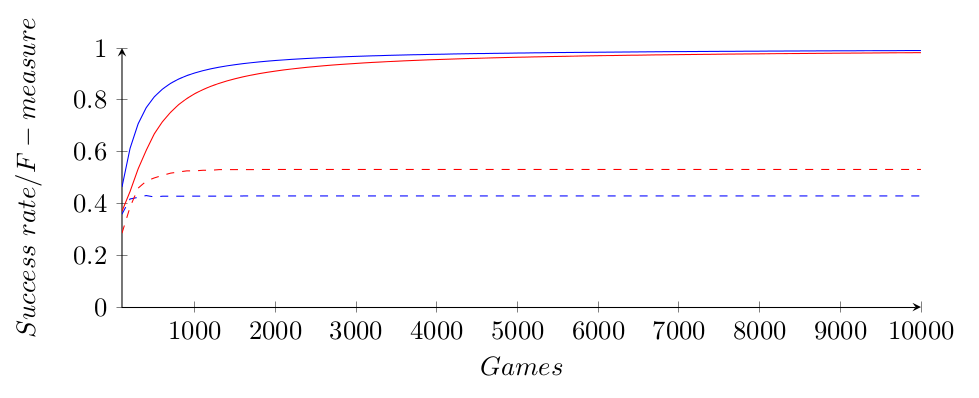

In red the average success rate (over 10 runs) of refine;

In blue the average success rate (over 10 runs) of addjoin;

In green the average success rate (over 10 runs) of refadd;

In dashed, the semantic F-measure.

To address hypothesis H1, we compare with the results of add from 20170208-NOOR:

In blue the average success rate (over 10 runs) of addjoin;

In red the average success rate (over 10 runs) of add;

In dashed, the semantic F-measure.

To address hypothesis H2, we compare with the results of replace from 20170208-NOOR:

In blue the average success rate (over 10 runs) of refine;

In red the average success rate (over 10 runs) of replace;

In dashed, the semantic F-measure.

(plots drawn from data scaled every 100 measures)

Key points:

Further experiments:

This file can be retrieved from URL https://sake.re/20170209-NOOR

It is possible to check out the repository by cloning https://felapton.inrialpes.fr/cakes/20170209-NOOR.git

This experiment has been transferred from its initial location at https://gforge.inria.fr (no longer available)