2018-11-09: strengthening was reimplemented differently (see 20181109-NOOR)

Designer: Iris Lohja (INRIA) (2018-06-01)


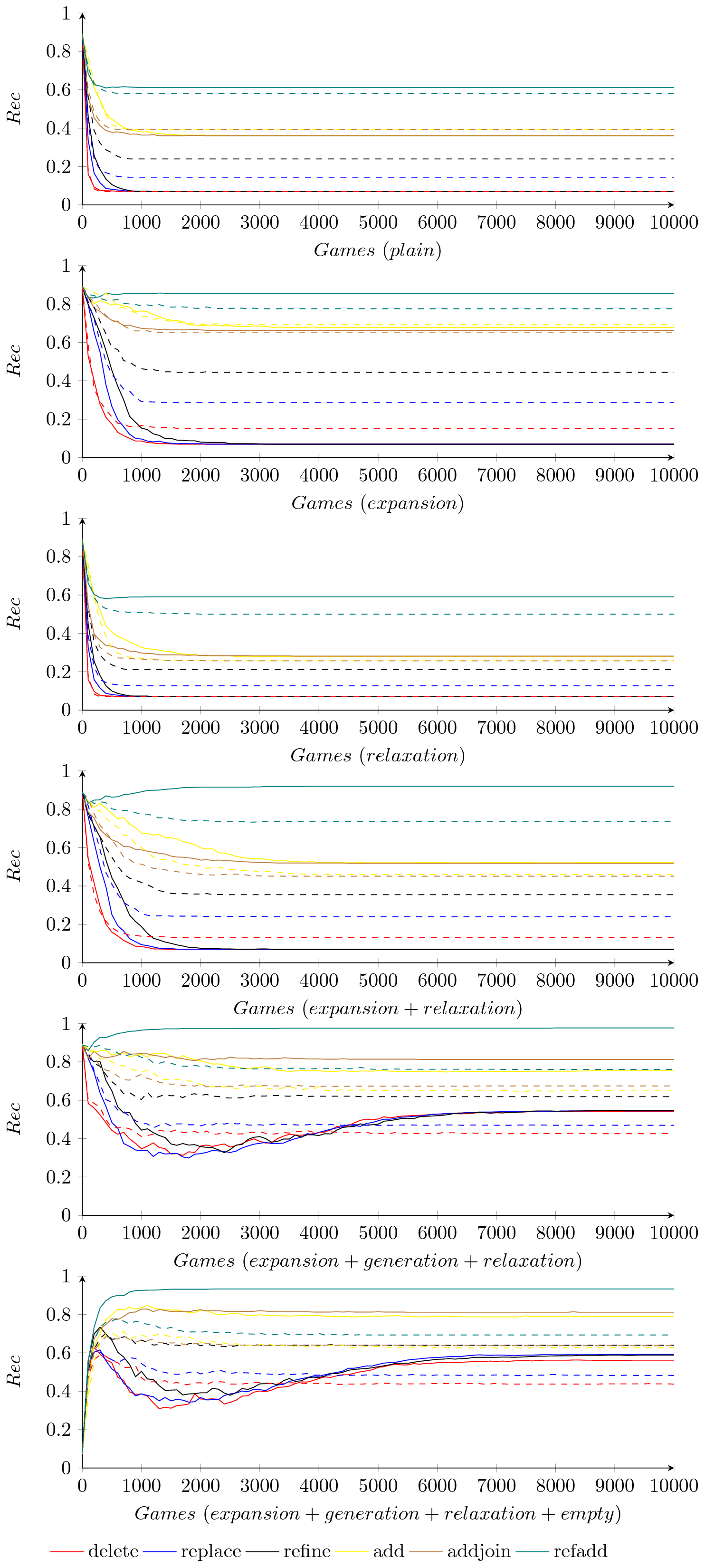

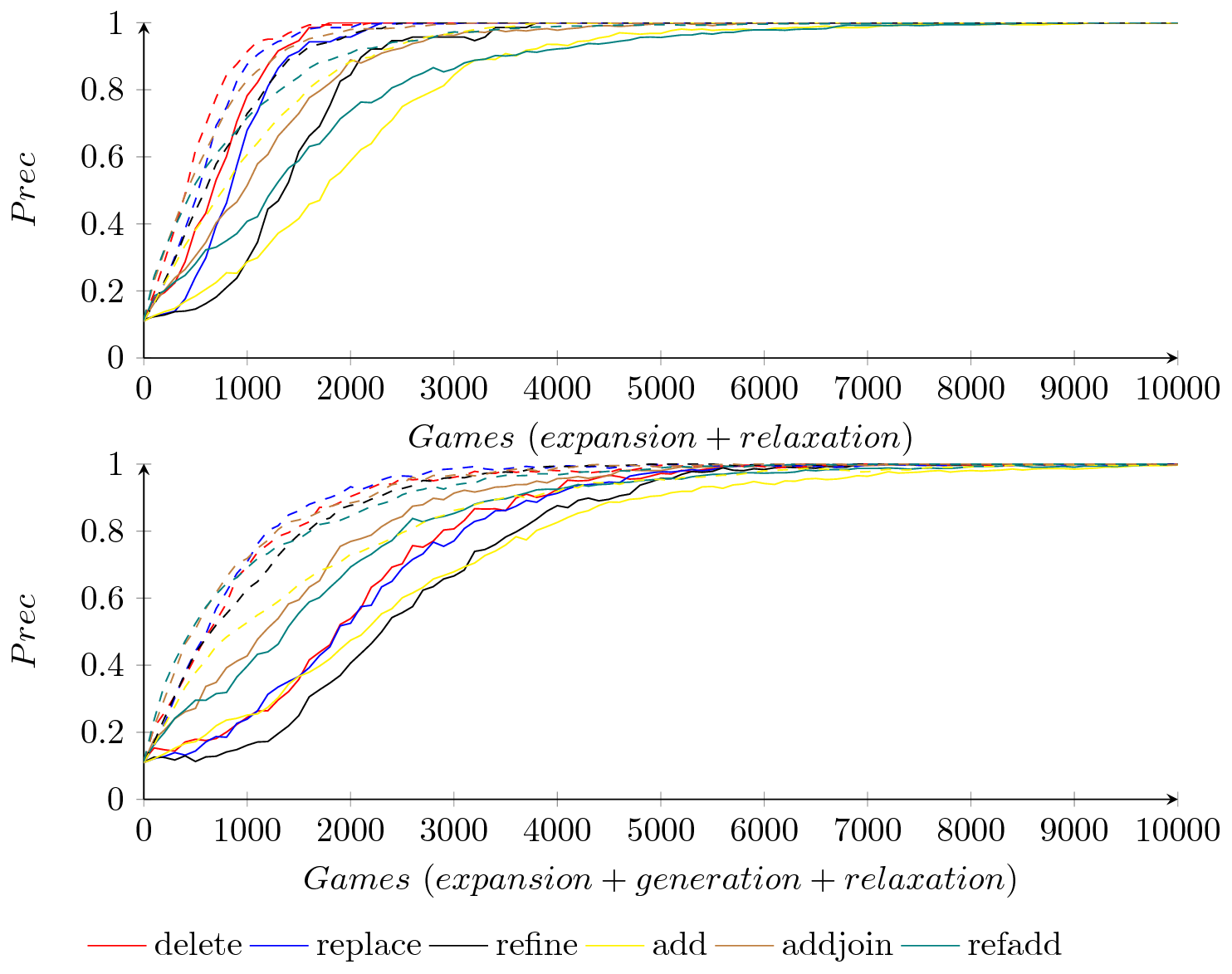


Revision of networks of ontologies with most specific strengthening (4 agents; 10 runs; 10000 games; delete/replace/refine/add/addjoin/refadd)

Hypothesis: Strengthening (here, most specific strengthening) improves recall over expansion

Experimental setting: Same as 20180601-NOOR, replaying the same runs as 20180308-NOOR with most specific strengthening.

Experimenter: Jérôme Euzenat (INRIA) (2018-08-28)

Date: 2018-08-28

Lazy lavender hash: 759ff097b96520c12aa84f3749927f9a22022e62

Classpath: lib/lazylav/ll.jar:lib/slf4j/logback-classic-1.2.3.jar:lib/slf4j/logback-core-1.2.3.jar:.

OS: stretch

Variation of: 20180601-NOOR

Parameters: params.sh

Command line (script.sh):

```bash
. params.sh

OUTPUT=${OUTPUT}${LABEL}

for op in ${OPS}
do
    # strengthening only
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} ${PARAMS}
    # expansion
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-clever-nr-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} -DexpandAlignments=clever -DnonRedundancy ${PARAMS}
    # relaxation
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-im80-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} -DimmediateRatio=80 ${PARAMS}
    # expansion + relaxation
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-clever-nr-im80-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} -DexpandAlignments=clever -DnonRedundancy -DimmediateRatio=80 ${PARAMS}
    # expansion + generation + relaxation
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-clever-nr-im80-gen-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} -DexpandAlignments=clever -DnonRedundancy -DimmediateRatio=80 -Dgenerative ${PARAMS}
    # expansion + generation + relaxation, starting from empty networks
    bash scripts/runexp.sh -p ${OUTPUT} -d ${DIRPREF}-${op}-clever-nr-im80-gen-empty-${postfix} java -Dlog.level=INFO -cp ${JPATH} fr.inria.exmo.lazylavender.engine.Monitor ${OPT} ${LOADOPT} -DrevisionModality=${op} -DexpandAlignments=clever -DnonRedundancy -DimmediateRatio=80 -Dgenerative -DstartEmpty ${PARAMS}
done
```

Classes used: NOOEnvironment, AlignmentAdjustingAgent, AlignmentRevisionExperiment, ActionLogger, AverageLogger, Monitor

Execution environment: 24 * Intel(R) Xeon(R) CPU E5-2420 0 @ 1.90GHz with 20GB RAM / Linux ProxMox 2 / Linux 4.15.17-1-pve / Java(TM) SE Runtime Environment 1.8.0_151 with 4.33G max heap size

Note: These experiments are an independent rerun of experiments 1-6 of Iris Lohja (INRIA)'s MSc thesis. The tables and plots on this page cover these results and confirm them. Hence the analysis agrees with hers.

Input: The input required for reproducibility can be retrieved from https://files.inria.fr/sakere/input/expeRun.zip and then unpacked with `unzip expeRun.zip -d input`.

4-10000-add-clever-nr-im80-gen-empty-strspc.tsv 4-10000-add-clever-nr-im80-gen-empty-strspc.txt 4-10000-add-clever-nr-im80-gen-strspc.tsv 4-10000-add-clever-nr-im80-gen-strspc.txt 4-10000-add-clever-nr-im80-strspc.tsv 4-10000-add-clever-nr-im80-strspc.txt 4-10000-add-clever-nr-strspc.tsv 4-10000-add-clever-nr-strspc.txt 4-10000-add-im80-strspc.tsv 4-10000-add-im80-strspc.txt 4-10000-add-strspc.tsv 4-10000-add-strspc.txt 4-10000-addjoin-clever-nr-im80-gen-empty-strspc.tsv 4-10000-addjoin-clever-nr-im80-gen-empty-strspc.txt 4-10000-addjoin-clever-nr-im80-gen-strspc.tsv 4-10000-addjoin-clever-nr-im80-gen-strspc.txt 4-10000-addjoin-clever-nr-im80-strspc.tsv 4-10000-addjoin-clever-nr-im80-strspc.txt 4-10000-addjoin-clever-nr-strspc.tsv 4-10000-addjoin-clever-nr-strspc.txt 4-10000-addjoin-im80-strspc.tsv 4-10000-addjoin-im80-strspc.txt 4-10000-addjoin-strspc.tsv 4-10000-addjoin-strspc.txt 4-10000-delete-clever-nr-im80-gen-empty-strspc.tsv 4-10000-delete-clever-nr-im80-gen-empty-strspc.txt 4-10000-delete-clever-nr-im80-gen-strspc.tsv 4-10000-delete-clever-nr-im80-gen-strspc.txt 4-10000-delete-clever-nr-im80-strspc.tsv 4-10000-delete-clever-nr-im80-strspc.txt 4-10000-delete-clever-nr-strspc.tsv 4-10000-delete-clever-nr-strspc.txt 4-10000-delete-im80-strspc.tsv 4-10000-delete-im80-strspc.txt 4-10000-delete-strspc.tsv 4-10000-delete-strspc.txt 4-10000-refadd-clever-nr-im80-gen-empty-strspc.tsv 4-10000-refadd-clever-nr-im80-gen-empty-strspc.txt 4-10000-refadd-clever-nr-im80-gen-strspc.tsv 4-10000-refadd-clever-nr-im80-gen-strspc.txt 4-10000-refadd-clever-nr-im80-strspc.tsv 4-10000-refadd-clever-nr-im80-strspc.txt 4-10000-refadd-clever-nr-strspc.tsv 4-10000-refadd-clever-nr-strspc.txt 4-10000-refadd-im80-strspc.tsv 4-10000-refadd-im80-strspc.txt 4-10000-refadd-strspc.tsv 4-10000-refadd-strspc.txt 4-10000-refine-clever-nr-im80-gen-empty-strspc.tsv 4-10000-refine-clever-nr-im80-gen-empty-strspc.txt 4-10000-refine-clever-nr-im80-gen-strspc.tsv 
4-10000-refine-clever-nr-im80-gen-strspc.txt 4-10000-refine-clever-nr-im80-strspc.tsv 4-10000-refine-clever-nr-im80-strspc.txt 4-10000-refine-clever-nr-strspc.tsv 4-10000-refine-clever-nr-strspc.txt 4-10000-refine-im80-strspc.tsv 4-10000-refine-im80-strspc.txt 4-10000-refine-strspc.tsv 4-10000-refine-strspc.txt 4-10000-replace-clever-nr-im80-gen-empty-strspc.tsv 4-10000-replace-clever-nr-im80-gen-empty-strspc.txt 4-10000-replace-clever-nr-im80-gen-strspc.tsv 4-10000-replace-clever-nr-im80-gen-strspc.txt 4-10000-replace-clever-nr-im80-strspc.tsv 4-10000-replace-clever-nr-im80-strspc.txt 4-10000-replace-clever-nr-strspc.tsv 4-10000-replace-clever-nr-strspc.txt 4-10000-replace-im80-strspc.tsv 4-10000-replace-im80-strspc.txt 4-10000-replace-strspc.tsv 4-10000-replace-strspc.txt
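
The result file names above appear to follow the directory names passed to `runexp.sh` in script.sh, i.e. `${DIRPREF}-${op}[-options]-${postfix}` plus a `.tsv` or `.txt` extension. The following sketch regenerates the expected list. It assumes (this is not stated on this page) that `${DIRPREF}` expands to `4-10000` (4 agents, 10000 games) and `${postfix}` to `strspc`; the function name `expected_result_files` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: regenerate the expected result file names.
# Assumed values (not given on this page): DIRPREF=4-10000, postfix=strspc.
expected_result_files() {
  local dirpref="4-10000" postfix="strspc"
  local ops="add addjoin delete refadd refine replace"
  # Option suffixes, in the order of the runexp.sh calls in script.sh;
  # the empty string stands for the run with strengthening only.
  local suffixes=("" "-clever-nr" "-im80" "-clever-nr-im80" \
                  "-clever-nr-im80-gen" "-clever-nr-im80-gen-empty")
  local op suffix ext
  for op in $ops; do
    for suffix in "${suffixes[@]}"; do
      for ext in tsv txt; do
        echo "${dirpref}-${op}${suffix}-${postfix}.${ext}"
      done
    done
  done
}

# 6 operators x 6 configurations x 2 extensions = 72 names
expected_result_files | wc -l
```

Comparing this output with the content of a directory holding the result files (for instance with `diff <(expected_result_files | sort) <(ls | sort)`) would reveal missing runs.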

Here are the results of this experiment for all configurations. They correspond to Tables 1 to 5 of Iris Lohja (INRIA)'s dissertation (the last configuration was not reproduced there).

| test | success rate | network size | incoherence degree | semantic precision | semantic F-measure | semantic recall | maximum convergence |
|---|---|---|---|---|---|---|---|
| strengthen=specific (compare to 20180308-NOOR) | | | | | | | |
| delete | 1.00 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 789 |
| replace | 0.99 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1224 |
| refine | 0.99 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1224 |
| add | 0.97 | 28 | 0.14 | 0.81 | 0.50 | 0.36 | 3192 |
| addjoin | 0.98 | 28 | 0.14 | 0.81 | 0.50 | 0.36 | 2391 |
| refadd | 0.97 | 45 | 0.25 | 0.71 | 0.66 | 0.61 | 3132 |
| expansion + strengthen=specific (compare to 20180529-NOOR) | | | | | | | |
| delete | 0.98 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1909 |
| replace | 0.97 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 2020 |
| refine | 0.96 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 3062 |
| add | 0.93 | 54 | 0.28 | 0.68 | 0.68 | 0.68 | 5769 |
| addjoin | 0.96 | 52 | 0.29 | 0.66 | 0.66 | 0.66 | 2875 |
| refadd | 0.95 | 68 | 0.34 | 0.63 | 0.72 | 0.85 | 4216 |
| relaxation + strengthen=specific (compare to 20180311-NOOR) | | | | | | | |
| delete | 1.00 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 789 |
| replace | 0.99 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1224 |
| refine | 0.99 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1224 |
| add | 0.97 | 23 | 0.00 | 1.00 | 0.43 | 0.28 | 4301 |
| addjoin | 0.98 | 24 | 0.00 | 1.00 | 0.44 | 0.28 | 4904 |
| refadd | 0.97 | 50 | 0.00 | 1.00 | 0.74 | 0.59 | 9060 |
| expansion + relaxation + strengthen=specific (compare to 20180530-NOOR) | | | | | | | |
| delete | 0.98 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 1752 |
| replace | 0.97 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 2212 |
| refine | 0.95 | 6 | 0.00 | 1.00 | 0.13 | 0.07 | 3753 |
| add | 0.92 | 44 | 0.00 | 1.00 | 0.69 | 0.52 | 9275 |
| addjoin | 0.96 | 44 | 0.00 | 1.00 | 0.68 | 0.52 | 6210 |
| refadd | 0.93 | 79 | 0.00 | 1.00 | 0.96 | 0.92 | 9060 |
| expansion + generation + relaxation + strengthen=specific (compare to 20180601-NOOR) | | | | | | | |
| delete | 0.90 | 56 | 0.00 | 1.00 | 0.70 | 0.54 | 8720 |
| replace | 0.89 | 56 | 0.00 | 1.00 | 0.71 | 0.55 | 8669 |
| refine | 0.87 | 57 | 0.00 | 1.00 | 0.71 | 0.55 | 7553 |
| add | 0.87 | 64 | 0.00 | 1.00 | 0.86 | 0.75 | 9926 |
| addjoin | 0.92 | 69 | 0.00 | 1.00 | 0.90 | 0.81 | 8095 |
| refadd | 0.92 | 84 | 0.00 | 1.00 | 0.99 | 0.98 | 9901 |
| expansion + generation + empty + relaxation + strengthen=specific (compare to 20180827-NOOR) | | | | | | | |
| delete | 0.89 | 65 | 0.00 | 1.00 | 0.72 | 0.56 | 8658 |
| replace | 0.89 | 65 | 0.00 | 1.00 | 0.74 | 0.59 | 8912 |
| refine | 0.86 | 65 | 0.00 | 1.00 | 0.74 | 0.59 | 9161 |
| add | 0.88 | 74 | 0.00 | 0.99 | 0.88 | 0.79 | 9876 |
| addjoin | 0.93 | 75 | 0.00 | 1.00 | 0.90 | 0.81 | 8338 |
| refadd | 0.92 | 86 | 0.00 | 1.00 | 0.97 | 0.93 | 8553 |
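
The semantic F-measure column is consistent, up to rounding, with the usual harmonic mean of semantic precision $P$ and semantic recall $R$. This reading is ours and is not stated on this page. For instance, for add with strengthening only (first block) and for refadd with expansion + relaxation + strengthening (fourth block):

$$
F = \frac{2PR}{P+R}, \qquad
\frac{2 \times 0.81 \times 0.36}{0.81 + 0.36} = \frac{0.5832}{1.17} \approx 0.50, \qquad
\frac{2 \times 1.00 \times 0.92}{1.00 + 0.92} = \frac{1.84}{1.92} \approx 0.96
$$

Since the table reports two-digit values, recomputing $F$ from the rounded $P$ and $R$ may differ in the last digit: refadd in the last block gives $1.86 / 1.93 \approx 0.96$ instead of the reported 0.97.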

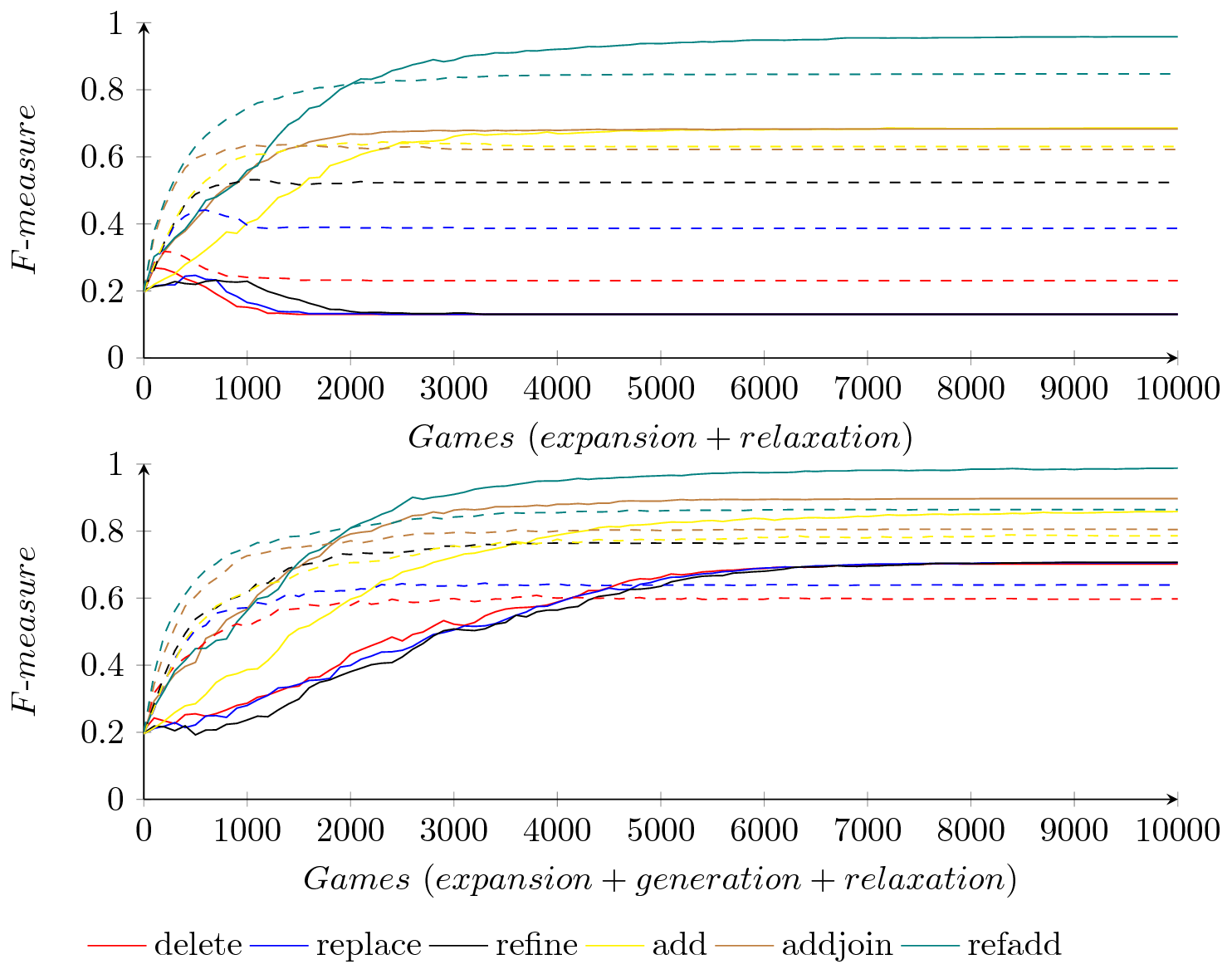

Each plot compares a measure with most specific strengthening (solid line) against the same measure without it (dashed line). The data for the dashed curves come from the experiments indicated in the tables above.

Since the goal of strengthening is to increase recall, we first display the effect on recall in all runs:

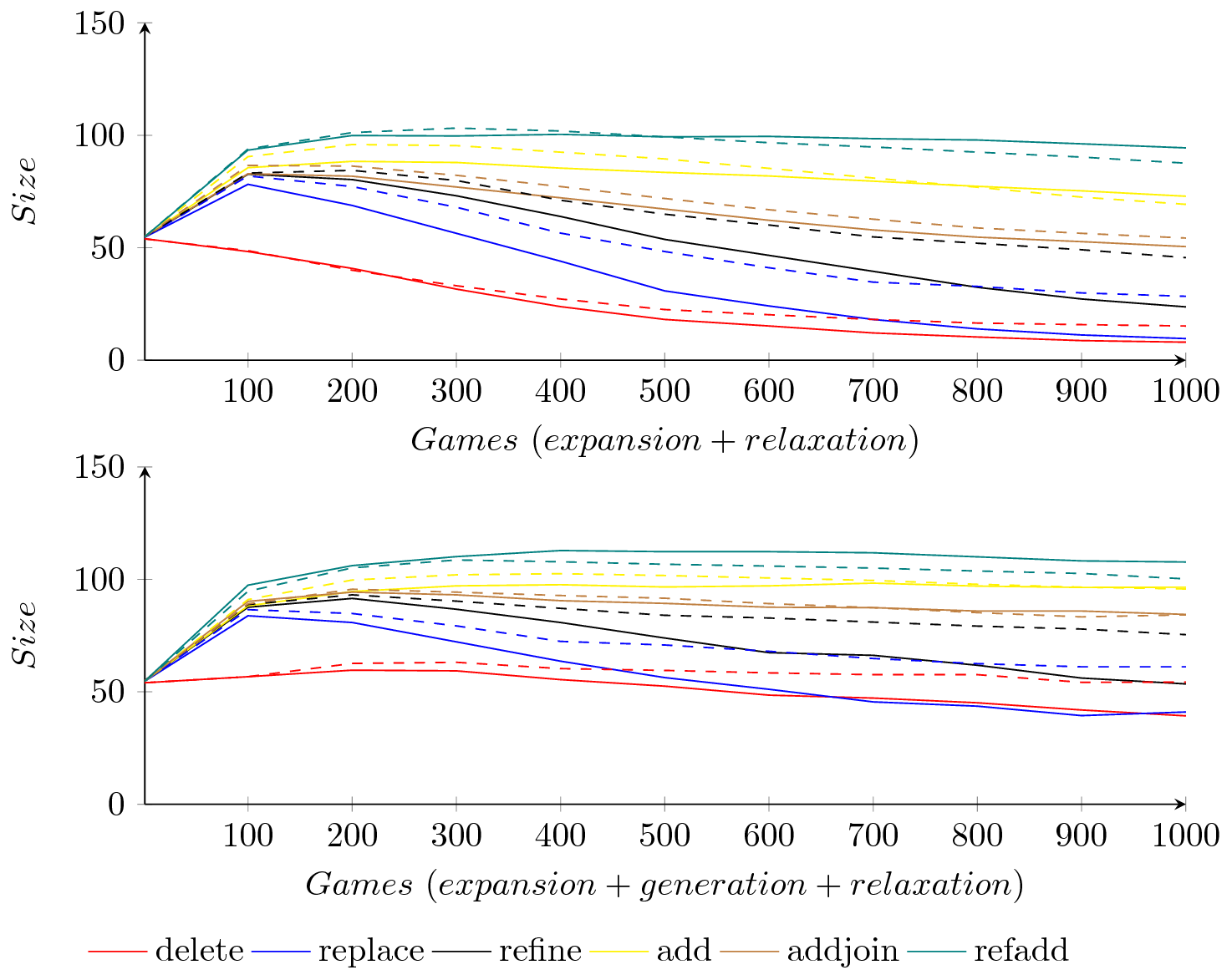

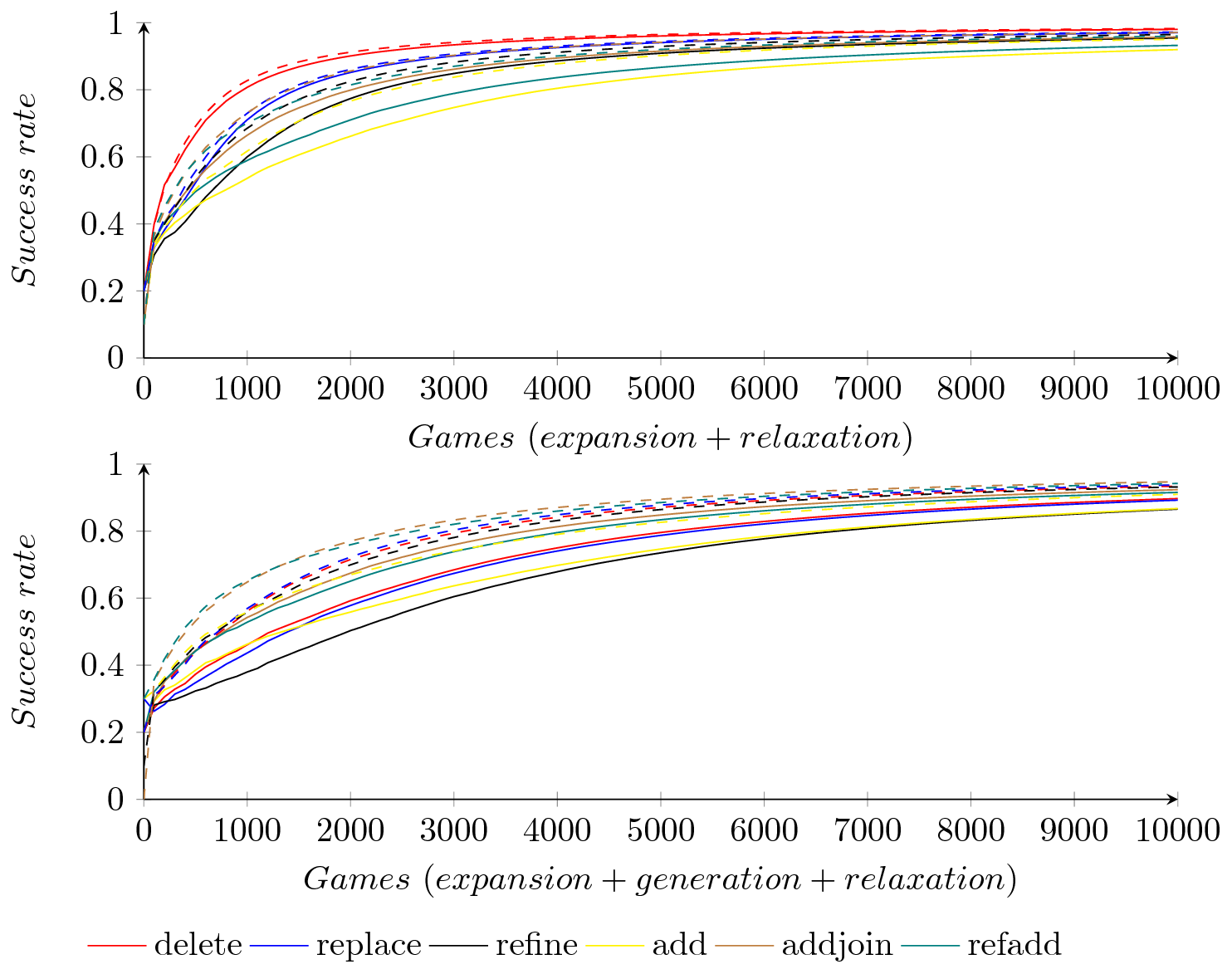

In the following, we compare two specific runs highlighting the effect of generation over expansion. The upper plot is for the expansion and relaxation modalities, the lower one for expansion, generation and relaxation.

Analyst: Jérôme Euzenat (INRIA) (2018-09-13)

Key points:

On delete, replace and refine, strengthening has a negative influence when 'generation' is not used. This is natural: a correct correspondence may be replaced by a possibly incorrect one, and when the latter is found incorrect, there is no way to recover the former (this requires 'add').

When used with generation, these three operators enjoy an improved recall.

On the other side of the spectrum, strengthening always improves the recall of the refadd operator; this is not the case for add and addjoin, whose recall only improves when relaxation is available. This remains to be explained, as relaxation is supposed to improve precision (which it does). It seems that

As already observed, there is little difference between starting from empty and from random networks. The networks obtained when starting from random networks contain fewer correspondences, likely due to the unrealistic size of the initial networks.

With expansion + generation + relaxation + strengthen=specific, refadd achieves remarkable results, with F-measures of .99 (starting from random networks) and .97 (starting from empty networks).

Some tests have a late maximum convergence rank, close to the 10000 games played; hence these runs have likely not converged.
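
To list the configurations concerned, one can filter the results table for rows whose maximum convergence rank is close to the number of games. The following sketch does so with awk on the Markdown table saved to a file. The function name `late_convergence`, the input file and the 9000 threshold are assumptions for illustration, not part of the experiment's tooling.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the results table (Markdown, on stdin) and print
# the rows whose maximum convergence rank (last column) exceeds a threshold.
# The default threshold of 9000 (out of 10000 games) is an arbitrary assumption.
late_convergence() {
  local threshold="${1:-9000}"
  awk -F'|' -v t="$threshold" '
    # Section rows have an empty second cell: remember their title.
    NF >= 10 && $3 ~ /^ *$/ { sec = $2; gsub(/^ +| +$/, "", sec); next }
    # Data rows: field 2 is the operator, field 9 the maximum convergence.
    NF >= 10 && $9 ~ /^ *[0-9]+ *$/ && $9 + 0 > t {
      op = $2; conv = $9
      gsub(/ /, "", op); gsub(/ /, "", conv)
      print sec "\t" op "\t" conv
    }'
}
```

For instance, `late_convergence 9000 < results.md` prints each such row as section title, operator and rank, separated by tabs. Keeping the section title matters because the same operator appears in every block.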

Further experiments:

This file can be retrieved from URL https://sake.re/20180828-NOOR

It is possible to check out the repository by cloning https://felapton.inrialpes.fr/cakes/20180828-NOOR.git

This experiment has been transferred from its initial location at https://gforge.inria.fr (not available any more)

The original, unaltered associated zip file can be obtained from https://files.inria.fr/sakere/gforge/20180828-NOOR.zip